Category LLM

StarCoder2 is a family of code generation models (3B, 7B, and 15B), trained on 600+ programming languages from The Stack v2 and some natural language text such as Wikipedia, arXiv, and GitHub issues. The models use Grouped Query Attention, a context window… Continue Reading →

In this example, we’ll imagine that our chatbot needs to answer questions about the content of a website. To do that, we’ll need a way to store and access that information when the chatbot generates its response. Source: Building a… Continue Reading →

Goal: better, more focused search for www.cali.org. In general, the plan is to scrape the site into a vector database, make the embeddings in that vector db available to Llama 2, and provide API endpoints to search/find things. Hints and pointers. Llama2-webui –… Continue Reading →
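The scrape-then-search plan above can be sketched in a few lines. This is a minimal illustration only, not the setup the post describes: the bag-of-words `embed` function is a toy stand-in for a real embedding model, `TinyVectorStore` is a hypothetical in-memory substitute for a vector database, and the page paths and texts are invented sample data rather than real site content.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Hypothetical in-memory substitute for a vector database."""

    def __init__(self):
        self.items = []  # list of (url, vector) pairs

    def add(self, url, text):
        """Store the vector for one scraped page."""
        self.items.append((url, embed(text)))

    def search(self, query, k=3):
        """Return up to k (url, score) pairs, best match first."""
        q = embed(query)
        scored = [(cosine(q, vec), url) for url, vec in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [(url, score) for score, url in scored[:k] if score > 0]


# Invented sample pages standing in for scraped site content.
store = TinyVectorStore()
store.add("/page-a", "Interactive lessons on contract law and torts")
store.add("/page-b", "Conference schedule and registration details")
store.add("/page-c", "Free casebooks and open textbooks for law students")

results = store.search("contract law lessons", k=2)
for url, score in results:
    print(f"{url}  {score:.2f}")
```

In a fuller version, `embed` would call an actual embedding model, the store would be a real vector database, and `search` would sit behind an API endpoint; the ranking-by-similarity step stays the same.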

The longer holiday weekend edition. Opportunities and Risks of LLMs for Scalable Deliberation with Polis — Polis is a platform that leverages machine intelligence to scale up deliberative processes. In this paper, we explore the opportunities and risks associated with… Continue Reading →

Large language models (LLMs) are notoriously huge and expensive to work with. An LLM requires a lot of specialized hardware to train and manipulate. We’ve seen efforts to transform and quantize the models that result in smaller footprints and models… Continue Reading →

LLMs promise to fundamentally change how we use AI across all industries. However, actually serving these models is challenging and can be surprisingly slow even on expensive hardware. Today we are excited to introduce vLLM, an open-source library for fast… Continue Reading →

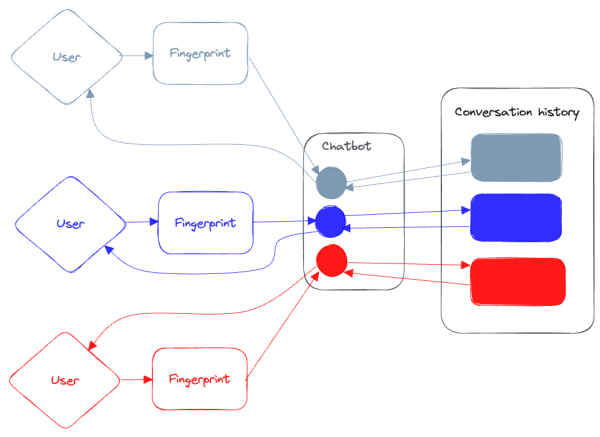

Large language models are a powerful new primitive for building software. But since they are so new, and behave so differently from normal computing resources, it’s not always obvious how to use them. In this post, we’re sharing a reference architecture for the… Continue Reading →

Tutorial – train your own llama.cpp mini-ggml-model from scratch! by u/Evening_Ad6637 in LocalLLaMA. Here I show how to train your own mini ggml model from scratch with llama.cpp! These are currently very small models (20 MB when quantized) and I think… Continue Reading →