Recent advancements in machine learning (ML) have unlocked opportunities for customers across organizations of all sizes and industries to invent new products and transform their businesses. However, the growth in demand for GPU capacity to train, fine-tune, experiment with, and run inference on these ML models has outpaced industry-wide supply, making GPUs a scarce resource. Access to GPU capacity is an obstacle for customers whose capacity needs fluctuate depending on the research and development phase they’re in.

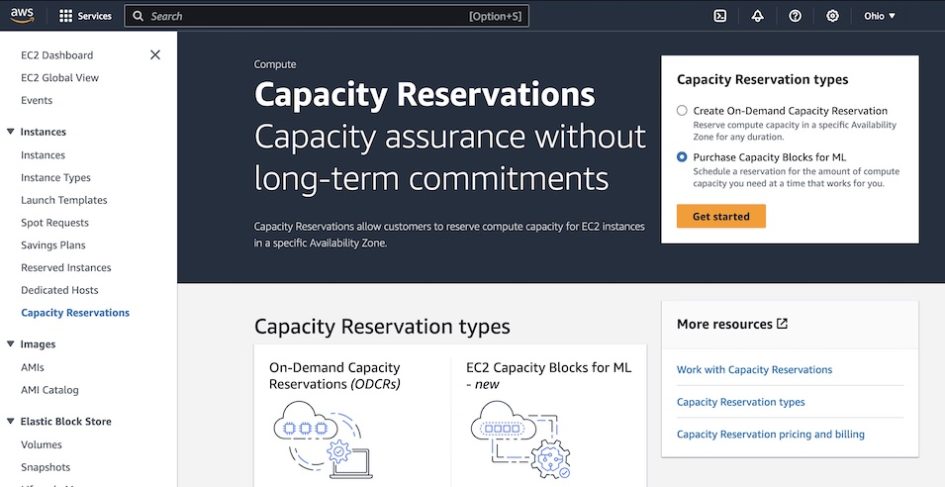

Today, we are announcing Amazon Elastic Compute Cloud (Amazon EC2) Capacity Blocks for ML, a new Amazon EC2 usage model that further democratizes ML by making it easy to access GPU instances to train and deploy ML and generative AI models. With EC2 Capacity Blocks, you can reserve hundreds of GPUs colocated in EC2 UltraClusters designed for high-performance ML workloads, using Elastic Fabric Adapter (EFA) networking in a petabit-scale non-blocking network, to deliver the best